The refreshed Open AI Agent Builder beta now lives under the broader AgentKit umbrella. AgentKit bundles orchestration, governance, and UI pieces into one stack, and the canvas remains an efficient way to sketch an agent before committing to TypeScript. The notes below capture what is new in practice and where VoltAgent fits once a prototype needs to ship.

What Agent Builder Ships

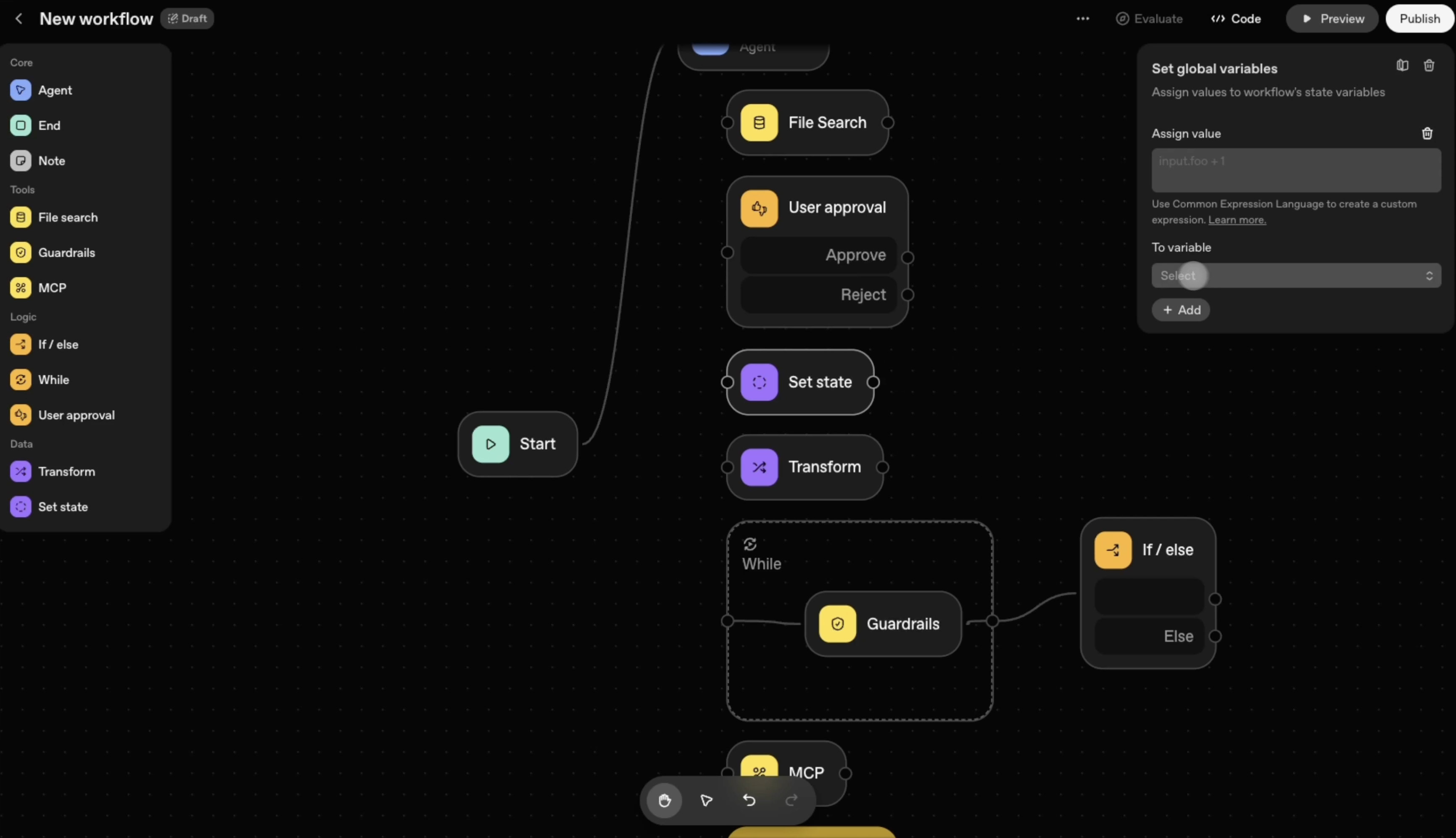

The core is still that canvas: nodes are steps, edges are transitions. In common flows such as support triage bots or data lookup assistants, teams start with an input node, branch on logic, call tools or models, optionally read or write memory, and exit through an output node. Each block has a focused config panel, and the preview runner continues to be the quickest way to validate behavior before anyone touches code.

- Input: Receives user messages or event payloads.

- Logic: Branches on booleans or pattern matches and routes control flow.

- Tool Call: Invokes external functions or APIs through a protocol boundary.

- LLM: Sends a prompt to a selected model and returns structured or free form output.

- Memory: Reads and writes scoped state for later steps.

- Output: Produces the terminal response or emits an event.

Beyond the node palette, the builder now emphasizes versioned workflows. Each publish creates a snapshot that can be pinned in ChatKit or exported as SDK code. Preview runs show trace by trace data, and the Evaluate tab lets teams run trace graders without leaving the canvas. Templates across support, research, and internal automation remain the fastest starting point when a working layout beats a blank screen.

In practice, this shape works well for support bots, data lookups, and lightweight automations, the kind of flows people prefer to see end to end on one screen.

AgentKit Adds More Building Blocks

AgentKit positions the builder as one pillar in a package that covers orchestration, observability, and deployment. Three parts stand out when piecing together flows:

- Connector Registry stabilizes the security posture. It is a single admin surface where ChatGPT workspaces and API organizations map to data sources like Google Drive, SharePoint, Microsoft Teams, Dropbox, plus any MCP connector the admin approves. No separate spreadsheet of systems is required.

- ChatKit handles the front end. It takes a published workflow ID, manages threads, streams responses, and shows the agent thinking indicator without rebuilding the chrome yet again. Version swaps happen server side, which keeps the review loop fast.

- Guardrails provides the safety layer. Whether mounted inside the builder or alongside a VoltAgent runtime, it offers jailbreak checks, PII masking, and policy hooks that fit into the node graph.

Taken together, AgentKit handles proof of concept graphing, the UI surface, and the compliance checklist larger organizations need. VoltAgent steps in when teams want handwritten orchestration, custom memory strategies, or the option to mix model providers beyond what the hosted stack offers.

Integration Points

On integrations, Agent Builder still connects to the OpenAI stack. MCP handles typed tool calls, ChatKit provides UI components, the editor taps directly into OpenAI APIs, and there is a one step deploy to the hosted runtime. In practice that means less glue code between a sketch and something teammates can click.

Publishing yields a versioned ID. Teams can keep an early version pinned for production while experimenting with a branch in preview, then roll forward only after the built in graders pass. If there is a need to own the runtime, the builder will export TypeScript that mirrors the graph so the workflow can move into VoltAgent or another orchestrator.

Guardrails and Constraints

On the safety side, the runtime supports constraints to contain behavior:

- Deny lists for specific data sources or HTTP targets.

- Approval gates that pause execution until a user confirms an action.

- Response checks that reject outputs that violate a content policy.

I like that constraints sit in the graph like regular nodes, which makes them easy to reason about during testing and easy to diff during reviews.

Evaluate and Improve

OpenAI’s latest update adds evaluation-first tooling into the same workflow. Teams can attach a curated set of traces, run the automated graders, and tweak prompts without leaving the canvas. For trickier problems it is possible to lean on reinforcement fine tuning. RFT is generally available on o4-mini and in private beta for GPT-5, with custom graders and tool call supervision to teach the model when to reach for internal systems. The feedback loop is fast enough for weekly iteration instead of quarterly rewrites.

So

If you’re starting cold, the templates help. Today’s set covers support, content generation, research, and internal automations. They’re regular flows you can edit, extend, or throw away, which keeps them useful as scaffolds rather than prescriptions.

VoltAgent is an open-source TypeScript framework for orchestrating AI agents. It gives you control over workflows, sub-agents, memory adapters, and observability. VoltAgent is built for production with support for retries, tracing, error handling, and type safety. You can integrate it with any front end or use it behind no-code tools. It works with OpenAI, Claude, and other LLM providers.

- GitHub repo: VoltAgent/voltagent

- Examples & templates: VoltAgent Examples

Also part of the VoltOps ecosystem: VoltOps handles deployment, monitoring, and operations around VoltAgent agents.

As AgentKit matures, VoltAgent stays the option when teams need richer customization, multi provider routing, or on premise deployments. Draft flows in the builder, export them, and evolve the logic in VoltAgent while stakeholders continue collaborating on the visual canvas.